Committing a crime against humanity. One ChatGPT prompt at a time.

Sparse thoughts on a sparse topic of LLMs, ChatGPT and human stupidity.

“Fake realities will create fake humans. Or, fake humans will generate fake realities and then sell them to other humans, turning them, eventually, into forgeries of themselves.”

― Philip K. Dick, I Hope I Shall Arrive Soon

“a society is a system in which all parts are interrelated, and you can’t permanently change any important part without changing all other parts as well.”

― Theodore J. Kaczynski, The Unabomber Manifesto: A Brilliant Madman's Essay on Technology, Society, and the Future of Humanity

“This important point that we have just illustrated with the case of motorized transport: When a new item of technology is introduced as an option that an individual can accept or not as he chooses, it does not necessarily REMAIN optional. In many cases the new technology changes society in such a way that people eventually find themselves FORCED to use it.”

― Theodore J. Kaczynski, Industrial Society and Its Future

“What the large language models are good at is saying what an answer should sound like, which is different from what an answer should be.”

― Rodney Brooks

The culture of exploration has perished into the abyss, if there ever was one, and all that is left is a group of leather sacks carrying computers-turned-entertainment-clients in their pockets, unable to build, unable to question, unable to think.

And while I generally welcome technology if it takes us somewhere, recent years have left me quite morose about our future. I don’t think I see one.

You know how they say we had a dream of new frontiers back in the 50s, and everyone was excited about the technological world waiting for us? The dream is dead now, and you and I missed the ceremony.

I had a discussion or two with a good friend of mine who didn’t understand the argument I borrowed from Gibson: that we have stopped dreaming altogether.

He claimed (hi, Sergio) that we didn’t stop dreaming; we just gained a better understanding of our real selves and of what we are capable of.

We now understand our bodies and our place in this universe, and there is no more room for the insane fables we once painted for ourselves.

Instead of spaceships, colonies on Mars and the Moon, superscreens in our hands and many other surreal things we were waiting for back in the 50s, today there is no expectation of anything good arriving anytime soon.

And the argument that we are more ‘down to Earth’ these days doesn’t hold up either; otherwise we would be directing all our resources toward making sure we actually survive here, collectively.

But we do little to improve or even sustain our species in the near future.

And today’s post is loosely related to all this (you can leave now, thanks).

While I was sceptical of all the scams we have seen around Bitcoin, Ethereum, poocoins and blockchain, all of these, terribly expensive as they were and with a huge toll on our planet’s health, were relatively safe for the human mind. I mean, who cared that Snoop bought some virtual property in the metaverse, or that FTX’s lawyer’s address was in the metaverse (two separate metaverses here, mind you)?

Yes, some of us believed there would be an extension of this world, with virtual currency and smart bots running all around us doing the daily chores (the metaverse was a utopian world in sci-fi that we mistook for an ideal future).

But all that amounted to people burning their cash on virtual coins no one has ever seen, with little to no consequence beyond that.

Except for those fragile minds jumping out of their windows after watching Bitcoin prices plummet.

But these days I am terrified beyond belief.

I am inconceivably mad too.

Everyone claims that ChatGPT and LLMs are something we, humanity, are bound to benefit from. In this post, I will try to lay out all the issues I have with the state of things as I see it.

Disclaimer: I am a self-proclaimed sofa philosopher. In no way am I able to comprehend the world of LLMs and machine learning in general.

So here they come, the top reasons I believe our society’s future will be damaged by the use of LLMs and generative “AI”, as everyone calls it:

1) Misinformation and manipulation on a planetary scale

Last week I spent 3 hours watching Lex Fridman talk to Marc Andreessen.

The moment they described a Chinese ChatGPT passing exam questions on communism and Mao, I smiled like an idiot. Given the closed nature of the internet in the PRC and their stupid (but existing) algorithms, they won’t need to keep their wumao army anymore. Or, even better, the trolls will get more productive.

Wuhan lab leak? One thousand articles go live within an hour about achievements in agriculture and a Chinese corn-based syrup that kills the virus in 5 minutes.

The volume of fresh content is so huge that real news posted by unlucky bystanders gets buried automatically, while the algorithms seamlessly push content marked as ‘party approved’ above the fold in everyone’s WeChat feed (meanwhile, a special team of faceless lurkers wipes the remotely “owned” devices of unlucky whistleblowers trying to spread the real news).

Mind you, I understand that many countries (Russia, for example) already block everything you can think of, but they cannot change the narrative across thousands of new outlets simultaneously. Their operations depend on many moving pieces, namely the humans on their payroll. These people can’t write 10,000 articles in 3 hours, make them all sound plausible, and keep them on that one narrative line.

With programmatic content, that won’t be an issue for long.

2) Resources spent nowhere

Sergio shared a leak with me about the cost of training ChatGPT: one hundred million dollars, and that is a somewhat conservative estimate. Apparently, keeping it running costs around $700k a day.

The whole thing was trained for months on everything they could scrape, to do what exactly? To make you write articles no one will read?

To make you ‘produce’ content? Again, not for reading, but for search engines that happen to have a hard time distinguishing real native-language content from generated bullshit.

The money, energy and equipment that went into generating comprehensible but meaningless text put a huge question mark over the whole idea.

Mark my words: Google and many others will train models to score the text you publish for ‘organicity’, if there is such a word. They will silently downrank artificial content and dump it in the landfills at the edge of our beloved internet. (Kudos to those of you SEO/SEM masters who started investing in hundreds of meaningless blog pages and product descriptions to ‘boost SEO’. Wait for it.)

I can see services like ‘Trusted Shops’ appearing on the horizon, showing a badge every time they encounter an article written by a real human, a real pro in his or her field. A distinction one will be proud of.

3) Inability of youth to think and learn the hard things

Sergio, a friend of mine, tells me that ChatGPT helps him write BigQuery queries so he doesn’t waste time digging through documentation he has already seen. But this dude is a senior engineer who could come up with an appropriate query himself after a long day of tinkering (without any documentation). Also, being a senior in this space, he would know whether the result ChatGPT returns makes enough sense to test-run.

But take his daughter, who wouldn’t know the intricacies of BQ and everything Google has to offer. She couldn’t distinguish a bad answer from a good one, simply because she would never go on a long hunt across our ancient web for the solutions, mistakes and use cases other people have shared online.

The act of active search, that is. Undoubtedly, the solution would be accepted no matter how feasible it is or how accurate it seems. Because why waste time skimming through Stack Overflow, watching people debate, and searching for a solution?

Why read other people making wrong assumptions if I can take a response I can’t verify and use it in production?

4) Companies behind the corpus of data, with weird skews between classes of content, unknown agendas and questionable practices

What data has been scraped, and from where? What is the skew, and where did it all come from? There are no answers here. You will see LLMs seamlessly pushing some narrative, depending on the context given and the corpus someone managed to scrape. Since LLMs tend to pick the most common (hence mediocre) answer, the ‘target average level’ drifts toward the relative ‘centre’ of the dataset. If 80% of the content you scraped was written by a guy named Jack who claims to be the messiah of Atlantis, your output will resemble Fandom articles, not Wikipedia ones. And you won’t notice it.

5) Data collected, data revealed and data corrupted (not in that order)

A pal of mine is busy putting his employer’s legal documents through ChatGPT to ‘summarize’ them. Who gets access to those docs, who reads them, and how the whole collected corpus is owned by companies like OpenAI is beyond today’s conversation. Someone is always listening, and the data is redundantly copied between machines out there the very moment you submit your prompt.

But you don’t even have to hand your data to OpenAI or Google yourself. If it wasn’t air-gapped and hidden behind encryption, it might be used to feed the algorithms without your consent (here is our first legal precedent).

6) As models get retrained from time to time to include the ‘new’ stuff out there, the share of generated content in the training data will grow unpredictably

In the interview with Lex, Marc mentions two LLMs that generated a considerable amount of content before their respective owners turned them off (look up the jailbroken AIs, Sydney and DAN).

The scale of the skew this whole thing will create in training data is immense, and there are no mechanisms to control it.

At some point, a big chunk of the information online will be watered down into averages of averages: generated content based on previously generated content. There will be lots of it, since it costs next to nothing to generate more.

Text, the symbolic representation of the thoughts we were passionate enough to put online, will become a medium for generators battling for influence.

Nothing online can be trusted these days: no articles, no blogs, no news outlets.

For now, I’d stick with Substacks full of people who actually care about their reputations as thinkers, but that won’t hold for long.

Switching to books written and published before 2000. That’s my response to the crisis we face.

By no means is this a coherent essay, but I wanted to get it out there now; otherwise, I would never publish it. It’s been sitting on my drive for the past two months.

P.S. Funnily enough, I am a PM to the bone; I love building and maintaining things.

And frankly speaking, my Luddite statements are not doing me any good long-term; PMs are not supposed to be critical of technology.

But I am confident we are not moving towards our dawn but our sunset.

I am all for the Opportunity rover, the brilliant idea of Fairphone, NMN research, machine learning for industry and cancer research, Falcon rockets, Linux and Monero.

But the new developments in “AI” as the public calls it… That’s a waste of time my kids will have to pay for.

Given the demographic issues we face, everything has to be put on the line to make newborns as educated and wise as possible (I know we have no idea how to achieve that). But arming them with a tool that eliminates the painful, wasteful search from their lives… I don’t know.

It is like giving a man on a remote island a good receiver and literally no tools to fix it.

A small bunch of people will know the world inside out.

And the rest will rely on others for the simplest things.

We are seriously fucked.

I have tried to think of how ML is improving my life, but I couldn’t find many real things. Noise cancelling (that’s not even ML)? Cancer detection? Outlier detection in a nuclear plant’s sensor data? The more I think about it, the harder it gets to come up with real cases that actually help humanity rather than the form of society we collectively decided to go for: capitalism.

Ad optimization, viewer recommendations, e-commerce recommendations, auto-tagging of clothing in pictures, churn and insolvency predictions, insurance probabilities. None of these actually make sense for us humans. Not in the long run. If we stay around long enough, that is. That is no longer guaranteed.

Here are the links to keep you fed:

This is where AI can do some of the most good and some of the least harm.

As I predicted months ago 🔮 OpenAI is getting sued for privacy violations!

The web is dying. Not because of some nonsensical war between different versions of web.

What if this is the current meaning of "Brands in the Schmetaverse"?

Coding with ChatGPT, GitHub Copilot, and other AI tools is both irresistible and dangerous

AI is overruling healthcare workers, leading to bad decisions and stress for workers.

Noam Chomsky on ChatGPT: It’s “Basically High-Tech Plagiarism” and “a Way of Avoiding Learning”

ChatGPT is a data privacy nightmare. If you’ve ever posted online, you ought to be concerned

ChatGPT and its kind are information black holes designed to prevent your escape.

I know my writing style and grammar suck.

But I am not writing a book, I am trying to light up an idea in your head.

I am trying to get you to keep your kids away from the lying machines, since you and I can’t be saved anymore.

I have tried to generate a thumbnail to embody the topic of my essay:

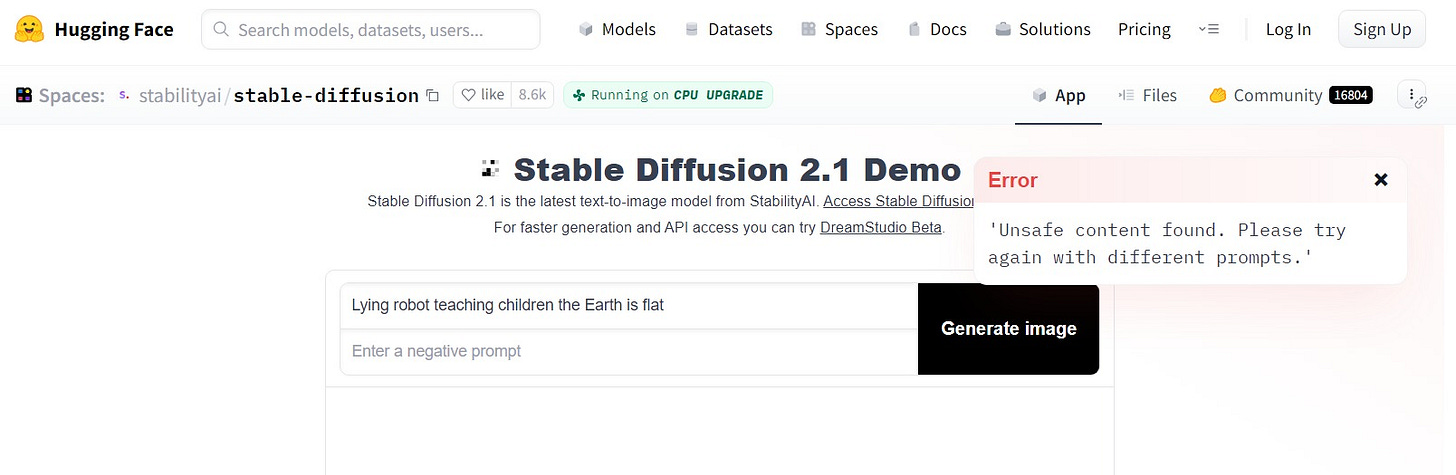

I have tried to do the same on Hugging Face with Stable Diffusion:

For some reason, the content is marked as unsafe. Whatever that means.